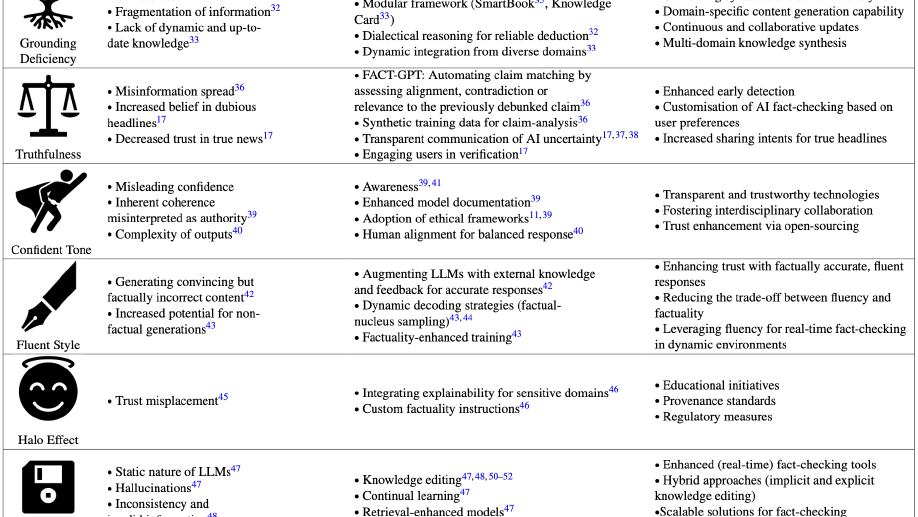

CopeNLU is a Natural Language Processing research group led by Isabelle Augenstein and Pepa Atanasova. We research methods for tasks that require a deep understanding of language, as opposed to shallow processing. We are affiliated with the Natural Language Processing Section, as well as with the Pioneer Centre for AI, at the Department of Computer Science, University of Copenhagen. Our core methodological interests include learning with limited training data and explainable AI, and we apply these methods to tasks such as fact checking, gender bias detection and question answering. Our group is partly funded by an ERC Starting Grant on Explainable and Robust Automatic Fact Checking, as well as a Sapere Aude Research Leader fellowship on 'Learning to Explain Attitudes on Social Media'.

Interests

- Natural Language Understanding

- Learning with Limited Labelled Data

- Explainable AI

- Fact Checking

- Question Answering

- Gender Bias Detection