Multilingual learning is concerned with training models that work well across multiple languages, including low-resource ones. We research methods for sharing information between languages, and study how typological knowledge bases can be utilised to this end. We are currently involved in three larger funded projects in this area.

Multi3Generation is a COST Action that funds collaboration among researchers in Europe and abroad. The project is coordinated by Isabelle Augenstein, and it aims to study language generation using multi-task, multilingual and multi-modal signals.

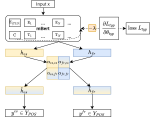

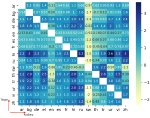

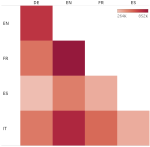

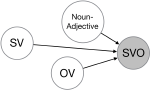

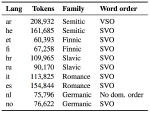

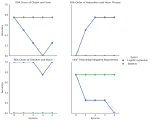

We are also a partner in a research project funded by the Swedish Research Council and coordinated by Robert Östling. It aims to study structured multilinguality, i.e. the idea of using language representations and typological knowledge bases to guide which information is shared between specific languages.

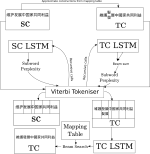

Lastly, Andrea Lekkas’ industrial PhD project with Ordbogen, supported by Innovation Fund Denmark, focuses on multilingual language modelling for developing writing assistants.